RAG: The AI Superpower You Didn’t Know You Needed (But Definitely Do!)

Ever wonder how AI chatbots sometimes sound like they know everything, even stuff that happened just yesterday? Or how they can answer super specific questions about obscure topics? Well, often, it’s not magic — it’s Retrieval-Augmented Generation, or RAG. Think of RAG as giving an AI a super-smart research assistant that can instantly look up information and use it to give you the best answer. It’s a game-changer, and here’s why it’s so cool.

What Exactly Is RAG?

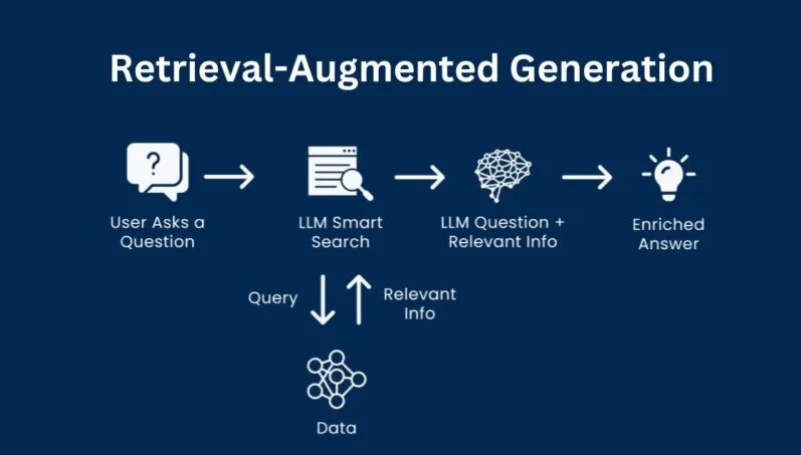

Imagine a brilliant student who has a massive library at their fingertips. When you ask them a question, they don’t just guess based on what they’ve memorized. Instead, they quickly search the library, pull out the most relevant books or articles, read through them, and then give you a really well-informed answer. That’s RAG in a nutshell!

On their own, Large Language Models (LLMs) are like that brilliant student relying only on what they learned during their “training”, which can be a bit outdated. RAG gives these LLMs a searchable “library” of information that you can keep current. So, when you ask a question, the RAG system first retrieves relevant information from this library and then augments (adds to) your question with that info. Finally, the LLM uses both its own knowledge and this fresh, retrieved context to generate an awesome response.

How RAG Pulls Off Its Magic Trick

RAG isn’t just one big thing; it’s a clever combination of a few key pieces working together. Here’s the three-step dance:

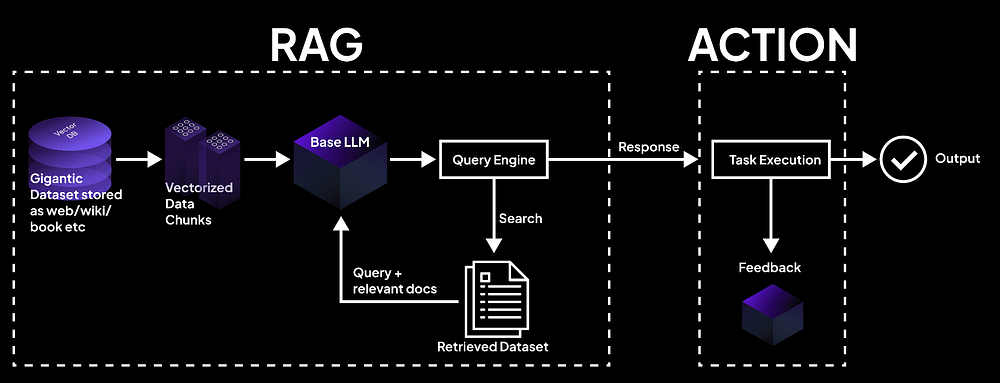

- Retrieval: When you type in your question, the RAG system first goes “hunting” for relevant documents in its knowledge base. It’s like a super-fast librarian looking for the right book. The documents have usually been converted ahead of time into special “numerical codes” (called embeddings). Your question gets converted the same way, so the system can find things with similar meanings, not just exact words.

- Augmentation: Once it finds the most useful documents or passages, it doesn’t just pass them directly to the AI. Instead, it combines these retrieved pieces of info with your original question. This creates a much richer, more detailed “prompt” for the AI to work with. It’s like giving the AI a helpful cheat sheet.

- Generation: Finally, the powerful Language Model (the “brain” of the AI) takes this enriched prompt and uses both its existing knowledge and the newly retrieved information to cook up your answer. This helps keep the response accurate, detailed, and directly related to the specific context you’re asking about.
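To make the three steps concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the documents are made up, `embed` is a toy word-count stand-in for a real embedding model (so it only matches shared words, not meanings), and `generate` is a placeholder where a real LLM call would go.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base, invented for this example.
DOCS = [
    "The warranty on the X100 blender lasts two years.",
    "To reset the X100 blender, hold the power button for ten seconds.",
    "Our office is closed on public holidays.",
]

def embed(text):
    """Toy 'embedding': word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=2):
    """Step 1: rank documents by similarity to the question and keep the top k."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def augment(question, passages):
    """Step 2: combine the retrieved passages with the original question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"

def generate(prompt):
    """Step 3: placeholder for a call to whichever LLM you use."""
    return f"[LLM response to a prompt of {len(prompt)} characters]"

question = "How do I reset the blender?"
passages = retrieve(question, DOCS)
prompt = augment(question, passages)
answer = generate(prompt)
```

With this toy data, the reset instructions rank first and the unrelated office-hours document is left out of the prompt.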

The Building Blocks of a RAG System

To make this magic happen, you need a few core components:

- Knowledge Base: This is your “library” — it could be documents, databases, websites, or anything with organized information. The better and more complete this library is, the smarter your RAG system will be.

- Embedding Model: This is the clever bit that turns plain text into those “numerical codes” (embeddings) so the system can understand the meaning behind words and find similar concepts.

- Vector Database: Think of this as a super-organized card catalog for your embeddings. It’s specially designed to store and quickly search through these numerical representations. Popular examples include Pinecone, Weaviate, and Chroma.

- Language Model (LLM): This is the actual AI that generates the response, like GPT-4, Claude, or open-source models like LLaMA.
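To get a feel for what a vector database does, here is a tiny in-memory sketch. It is not how Pinecone, Weaviate, or Chroma work internally (those use specialized indexes to stay fast at scale), and the class name, the three-number vectors, and the texts are all hypothetical. In a real system the vectors would come from the embedding model.

```python
import math

class InMemoryVectorStore:
    """Hypothetical toy store: keeps (vector, text) pairs and searches by brute force."""

    def __init__(self):
        self._items = []

    def add(self, vector, text):
        self._items.append((list(vector), text))

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query_vector, k=1):
        """Return the k most similar entries as (score, text), best first."""
        scored = [(self._cosine(query_vector, v), t) for v, t in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]

store = InMemoryVectorStore()
store.add([0.9, 0.1, 0.0], "Return policy: 30 days")      # made-up vectors
store.add([0.1, 0.9, 0.0], "Shipping takes 3 to 5 days")
store.add([0.0, 0.1, 0.9], "Careers page")

results = store.search([0.8, 0.2, 0.0], k=2)
```

The query vector points in nearly the same direction as the return-policy entry, so that entry comes back first.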

Why RAG is a Big Deal: The Superpowers

RAG isn’t just a techy buzzword; it brings some serious advantages to the AI table:

- Always Up-to-Date: Unlike regular AI models that are stuck with the info they learned during training (which could be years old!), RAG systems can access current information. Just update the knowledge base, and boom, your AI knows the latest news.

- Way More Accurate: Ever had an AI just make stuff up (we call these “hallucinations”)? RAG can cut down on this a lot. By grounding its answers in real sources, it’s much more likely to get the facts right, though it can still slip up if the retrieved info is wrong or off-topic. Plus, it can even tell you where it got the information from, which builds trust.

- Niche Expertise: Want an AI that’s an expert in, say, obscure medieval pottery techniques? With RAG, you can feed it all your specific documents on that topic, and suddenly, your AI is a pottery guru, even if it wasn’t trained on that info initially.

- Cheaper Customization: Instead of spending a fortune retraining a massive AI model, you can often customize a RAG system just by updating its knowledge base. This is a huge win for smaller companies or specific projects.

- Transparent Answers: Since RAG can point to its sources, you can actually see why the AI gave a certain answer. This is fantastic for understanding and trusting AI.
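One common way to get those source-backed answers is to number the retrieved passages in the prompt and ask the model to cite them. The sketch below assumes each passage is a dict with `source` and `text` keys, which is a made-up schema for illustration, and the file names are invented too.

```python
def build_cited_prompt(question, passages):
    """Number each passage and label it with its source so the model can cite it."""
    lines = [
        f"[{i}] ({p['source']}) {p['text']}"
        for i, p in enumerate(passages, start=1)
    ]
    context = "\n".join(lines)
    return (
        "Answer the question using the numbered sources below, "
        "and cite them like [1].\n\n"
        f"{context}\n\nQuestion: {question}"
    )

# Hypothetical retrieved passages.
passages = [
    {"source": "manual.pdf", "text": "Hold the power button for ten seconds to reset."},
    {"source": "faq.html", "text": "A reset does not erase saved settings."},
]
prompt = build_cited_prompt("Does a reset erase my settings?", passages)
```

Whether the model actually cites correctly still depends on the model, so it is worth spot-checking the citations it produces.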

Where You’ll See RAG in Action

RAG isn’t just for tech geeks; it’s showing up in everyday (and not-so-everyday) applications:

- Customer Support: Chatbots that can instantly find answers in product manuals and FAQs, making your customer service experience much smoother.

- Legal & Medical: Helping lawyers quickly find relevant cases or doctors sift through vast amounts of medical literature for diagnosis.

- Company Brains: Making it easy for employees to find internal policies, procedures, or company history.

- Learning Tools: AI tutors that can pull information from textbooks and research papers to help students learn.

RAG’s Kryptonite: The Challenges

While RAG is amazing, it’s not without its quirks:

- Retrieval Must Be Spot-On: If the system pulls up irrelevant or bad information, the AI’s answer will suffer. It’s like having a researcher who brings you the wrong books.

- Keeping the Library Tidy: That knowledge base needs constant care. If it’s outdated or messy, the system won’t perform well.

- A Bit More Costly to Run: Because it’s doing extra searching, RAG can sometimes be a bit slower and use more computing power than a simple AI model.

- Context Overload: Even the smartest AI has a limit to how much information it can process at once. If you retrieve too much, it might get confused.
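For the context overload problem, one simple guard is to cap how much retrieved text goes into the prompt. This sketch assumes the passages arrive ranked best first and uses a word count as a rough stand-in for the model's real token count. The function name and the budget are hypothetical.

```python
def fit_to_budget(passages, max_words):
    """Keep the top-ranked passages, in order, until the word budget is used up."""
    kept, used = [], 0
    for passage in passages:
        n = len(passage.split())
        if used + n > max_words:
            break  # stop at the first passage that would overflow the budget
        kept.append(passage)
        used += n
    return kept

ranked = ["a b c", "d e", "f g h i"]
trimmed = fit_to_budget(ranked, max_words=5)
```

Here the first two passages use exactly five words, so the third one is dropped.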

Tips for Making RAG Shine

If you’re thinking of using RAG, here are some pro tips:

- Pick the Right Approach: Simple questions might need a basic setup, but complex problems could require a more advanced RAG system.

- Clean Up Your Library: Make sure your documents are organized, up-to-date, and easy for the system to understand. Break them into smaller, digestible chunks if needed.

- Tweak the Search: Play around with how the system finds information. A little fine-tuning can make a big difference in accuracy.

- Check the Answers: Always have a way to monitor and check the quality of the retrieved info and the AI’s final answers. Human feedback is super valuable here.

- Plan for Growth: Think about how your system will handle more documents or more users down the line.
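The “smaller, digestible chunks” tip can be sketched like this. It splits on words with a little overlap so a sentence cut at a boundary still shows up whole in one chunk. The function name and default sizes are assumptions, and real pipelines often split on sentences, headings, or tokens instead.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words, sharing overlap words between neighbors."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
```

Each chunk would then be embedded and stored on its own, so retrieval can return just the relevant piece instead of a whole document.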

The Future is RAG-Powered!

RAG is just getting started. We’ll likely see even smarter ways for AIs to find information, perhaps even understanding images and videos. They’ll get even better at thinking through the retrieved info. As RAG tools become easier to use, more and more businesses will jump on board.

The Bottom Line

RAG is a massive leap forward for AI. It tackles some of the biggest problems with traditional AI, making systems more truthful, relevant, and adaptable. If you’re a business or just an enthusiast looking to build AI that you can genuinely trust with specific, up-to-date information, RAG isn’t just an option — it’s likely the best path forward.

It’s not just a cool piece of tech; it’s a fundamental shift towards AI that truly helps us by giving us reliable, context-aware answers. So, next time your AI seems impossibly smart, give a little nod to RAG — the unsung hero of accurate AI information processing!

What aspects of RAG are you most excited to explore further?

Sources: k21academy.com, superagi.com, wikipedia.com
