The AI Rumble: Gemini vs. GPT-4.5 — Who’s Got the Upper Hand?

Alright, let’s talk about AI. Remember when it felt like something straight out of a sci-fi movie? Well, friends, we’re living it. Every other week, it seems like Google or OpenAI drops a new bombshell, a fresh AI model that makes our jaws hit the floor. It’s like watching a high-stakes, super-fast Formula 1 race where the cars are constantly upgrading mid-lap, and the drivers are secretly swapping out engines between pit stops. The big question on everyone’s mind: Who’s winning the AI race — Google’s Gemini or OpenAI’s GPT?

It’s a hot topic, right? Especially here in India, where everyone’s buzzing about how AI is changing everything from our daily commutes to how we do business. We’ve got Google pushing hard with its Gemini family, and OpenAI, the folks who brought us ChatGPT, coming out swinging with their latest GPT models. So, let’s peel back the layers, ignore some of the corporate jargon, and dive into what really makes these AI titans tick. Is there a clear winner, or are they just excelling at different things? Let’s find out!

Meet the Contenders: The AI Heavyweights

First up, let’s get acquainted with our main fighters. Both Google and OpenAI have invested billions and countless hours into building these super-smart brains, and their latest iterations are truly something to behold.

Google’s Gemini Family: The Thinking Machines

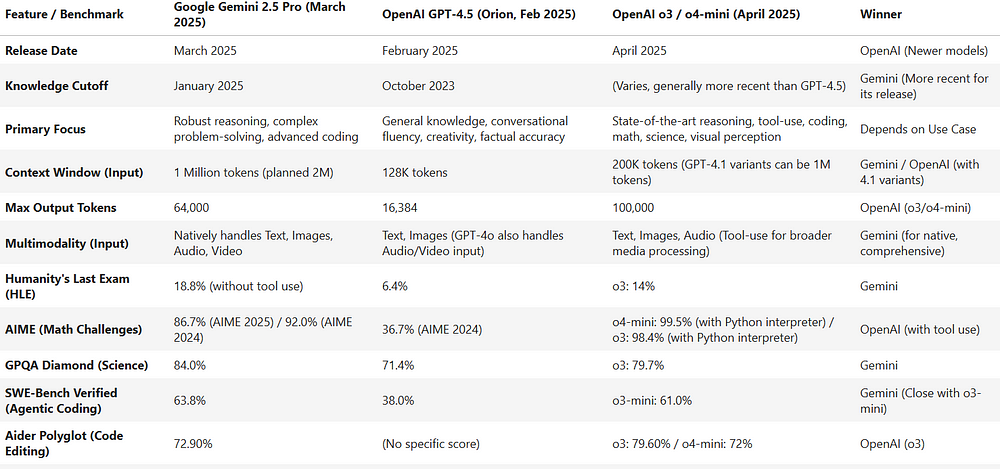

Google, the search giant, has been in the AI game for a long time, but Gemini is their true statement piece. When we talk about their top-tier model right now, we’re looking at Gemini 2.5 Pro, which officially rolled out in March 2025. Google has been bold in calling it their “most intelligent AI model,” and after digging into it, you can see why.

- Reasoning Powerhouse: If you’ve got a complex problem that needs serious brainpower, Gemini 2.5 Pro is designed for it. Think of it as that brilliant friend who can dissect a tricky logical puzzle or explain advanced quantum physics in a way that actually makes sense. It excels in math, science, and just generally connecting the dots. Google even introduced a “Deep Think” mode, which sounds exactly like what it is: the model takes a moment to really mull things over, leading to incredibly accurate and insightful responses. It’s not about speed here, it’s about depth.

- The Context Window King: This is where Gemini 2.5 Pro truly shines. It boasts a mind-boggling 1 million token context window. What does that even mean? Imagine feeding an AI an entire library — hundreds of thousands of words, whole books, massive codebases, long research papers, or years of chat logs — and it remembers everything and can instantly recall any part of it. This is huge for developers, researchers, or anyone dealing with vast amounts of information. Google’s even planning to expand this to 2 million tokens soon, which is just insane!

- True Multimodality: This is another biggie. Gemini isn’t just about text. It natively understands and processes information from text, images, audio, and even video. This means you can show it a video of a cricket match, ask it to analyze a specific player’s technique, and it gets it. Or show it a medical scan and ask for potential anomalies. It truly sees and hears the world in a more holistic way, not just converting everything to text first.

- Coding Prowess: If you’re a developer, pay attention. Gemini 2.5 Pro has shown some seriously impressive chops in coding benchmarks like Aider Polyglot (for code editing) and SWE-Bench Verified (for agentic coding). It’s not just generating snippets; it’s understanding complex codebases and making intelligent edits.

Then there’s Gemini 2.5 Flash. Think of this as Gemini’s agile younger sibling. It’s designed to be fast, efficient, and cost-effective. While not as “deep-thinking” as Pro, it’s perfect for tasks that need quick, accurate responses without breaking the bank — like summarizing articles, handling customer service chats, or extracting specific data points. And the generally available Gemini 2.0 Flash also offers improved capabilities and that crucial 1M token context window.

OpenAI’s GPT Family: The Conversational Maestros

OpenAI exploded onto the scene with ChatGPT and hasn’t really slowed down since. Their models are known for being incredibly articulate, creative, and just genuinely pleasant to interact with. Their current front-runner in this heavyweight bout is GPT-4.5, codenamed “Orion,” which was released in February 2025.

- Conversational King (and Queen!): If you’ve ever chatted with a GPT model, you know it feels uncannily human. GPT-4.5 is described as OpenAI’s “largest and best model for chat,” with enhanced general knowledge and a flair for creative expression. It has a fantastic grasp of conversational nuances, emotional intelligence, and even “aesthetic intuition,” making it brilliant for creative writing, brainstorming design ideas, or just having a free-flowing, natural conversation.

- Factual Accuracy: GPT-4.5 has a strong reputation for factual consistency and general knowledge. While no AI is perfect, it’s often a go-to for quick, reliable information.

- Multimodality (with nuance): GPT-4.5 can process both text and image input, so you can upload an image and ask it questions about it. Unlike Gemini, though, the initial GPT-4.5 descriptions didn’t emphasize native understanding of audio and video. OpenAI’s GPT-4o (released May 2024) bridges some of that gap: it handles text, audio, and images as input, and generates text and audio output.

- Context Window: GPT-4.5 offers a 128K token context window. This is great — it means it can handle pretty substantial documents and conversations. But when you put it next to Gemini 2.5 Pro’s 1 million, it’s a noticeable difference, like having a big table versus a massive conference hall for all your notes.
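
To make the table-versus-conference-hall comparison concrete, here is a minimal sketch that estimates whether a document fits in each window. The window sizes are the ones quoted above; the 4-characters-per-token ratio is only a rough rule of thumb for English text (an assumption, not either vendor’s tokenizer), and the helper names are made up for illustration.

```python
# Rough check of whether a text fits in a model's context window.
# ASSUMPTION: ~4 characters per token is a rule of thumb for English text;
# real tokenizers differ by model and language.
CHARS_PER_TOKEN = 4

# Context window sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "GPT-4.5": 128_000,
    "Gemini 2.5 Pro": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (heuristic only)."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits(text: str, model: str) -> bool:
    """Return True if the estimated token count fits the model's window."""
    return estimate_tokens(text) <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # Stand-in for a long book: 300,000 short words, 1.5 million characters.
    book = "word " * 300_000
    for model in CONTEXT_WINDOWS:
        print(f"{model}: fits = {fits(book, model)}")
```

With this stand-in text, the estimate comes to about 375,000 tokens, which overflows a 128K window but sits comfortably inside 1 million. For real work you would count tokens with the provider’s own tokenizer instead of a character heuristic.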

Now, here’s where it gets interesting and why this “race” is so dynamic. OpenAI hasn’t rested on GPT-4.5 alone. They’ve recently released even newer, specialized models that are shifting the landscape:

- GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano: These dropped in April 2025 and are reportedly outperforming even GPT-4o in areas like coding and instruction following. Crucially, they also come with a much larger context window of up to 1 million tokens, directly challenging Gemini’s lead there. This shows how quickly things are evolving: capabilities jump from one model to the next in a blink!

- OpenAI o3 and o4-mini: These are their latest “reasoning models,” also from April 2025. The big idea here is that they’re designed to “think for longer before responding.” Imagine an AI that actually pauses, ponders, and then gives you a super-thought-out answer. These are being hailed as the “smartest models OpenAI has released to date” for tasks in coding, math, science, and visual perception. They even boast the ability to agentically use tools within ChatGPT, meaning they can decide to run a search, use a calculator, or interact with other software to solve a problem — that’s a huge step towards true AI agency.

The Head-to-Head: Battle of the Benchmarks

Alright, enough with the intros. Let’s get to the nitty-gritty. Who’s actually performing better in the crucial tests? We’re looking at some recent, objective benchmarks here.

Reasoning & Problem-Solving: The True Test of Brains

- Humanity’s Last Exam (HLE): This isn’t your average pop quiz; it’s designed to test expert-level reasoning. Gemini 2.5 Pro scored 18.8%, while GPT-4.5 managed 6.4%. That’s a pretty clear win for Gemini. It suggests Gemini is better at grappling with abstract concepts and making complex deductions.

- AIME (American Invitational Mathematics Examination): For advanced math, Gemini 2.5 Pro hit 86.7% on the 2025 AIME. While specific GPT-4.5 scores aren’t widely publicized for this exact benchmark, OpenAI’s newer o4-mini with tools has shown very high scores. This indicates that while Gemini might have superior internal math reasoning, OpenAI’s models are getting incredibly good at using tools to solve math problems, which is often how humans do it anyway!

- GPQA Diamond (Graduate-Level Google-Proof Q&A, Science): Again, Gemini 2.5 Pro (84.0%) finishes well ahead of GPT-4.5 (71.4%). So, for tricky science questions, Gemini seems to have a deeper understanding.

Coding & Software Engineering: Who Builds the Best Code?

- SWE-Bench Verified (Agentic Coding): This tests an AI’s ability to act as a software engineer and fix real-world bugs. Gemini 2.5 Pro nailed 63.8%, while GPT-4.5 got 38.0%. This is a significant lead for Gemini, suggesting it’s better at understanding large codebases and autonomously making correct changes.

- Aider Polyglot (Code Editing): When it comes to editing existing code, Gemini 2.5 Pro (74.0%) again outperformed GPT-4.5 (44.9%).

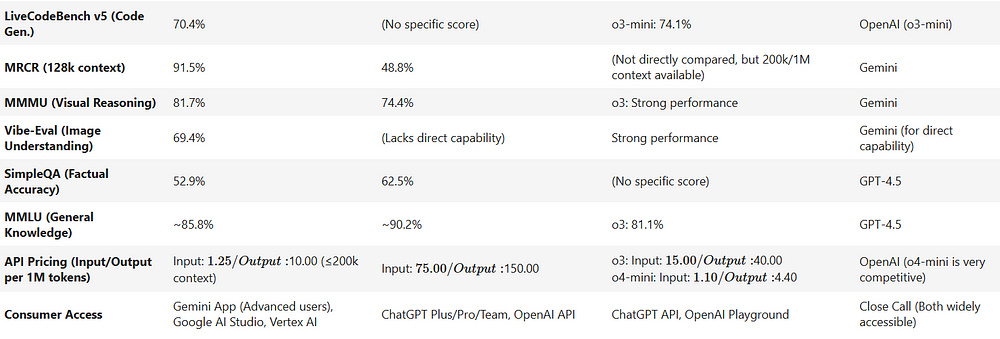

- LiveCodeBench v5 (Code Generation): For pure code generation, Gemini 2.5 Pro scored 70.4%. While a direct GPT-4.5 score isn’t always available here, one of OpenAI’s newer reasoning models, o3-mini, hit 74.1%. So, this particular area seems much tighter, with newer OpenAI models making strong strides.

- General Observation: While GPT-4.5 is excellent for instruction following and generating clean code snippets, Gemini appears to have a stronger grasp on complex, agentic coding tasks and understanding larger codebases. However, OpenAI’s latest ‘o-series’ models are rapidly closing the gap, and may even pull ahead of Gemini in some coding scenarios.

Long Context Handling: Who Has the Best Memory?

- MRCR (Multi-Round Coreference Resolution): This tests how well an AI can find and recall specific details buried in very long contexts. At 128k tokens, Gemini 2.5 Pro scored 91.5%, while GPT-4.5 managed 48.8%. This is a massive win for Gemini, and since 128k tokens fits inside both models’ windows, the gap reflects how well each model uses its context, not just how much it can hold. Add the 1 million token window on top, and it’s like Gemini has a photographic memory for libraries, while GPT-4.5 has one for well-stocked bookshelves.

Multimodality & Vision: Seeing is Believing

- MMMU (Massive Multi-discipline Multimodal Understanding): For visual reasoning, Gemini 2.5 Pro (81.7%) edges out GPT-4.5 (74.4%).

- Vibe-Eval (Image Understanding): Here, Gemini 2.5 Pro scored 69.4%, while a comparable GPT-4.5 score isn’t available. It’s a solid showing for Gemini’s image understanding, but without a GPT-4.5 number it isn’t a true head-to-head result.

- Important Note: While Gemini seems to have a more native, comprehensive multimodal approach (text, image, audio, video all at once), OpenAI’s GPT-4o does handle text, images, and audio as input, making OpenAI’s lineup highly capable in multimodal interactions too. The difference is subtle and might be in the way each model processes these inputs.

Fact-Checking & Accuracy: Who Gets it Right?

- SimpleQA: For straightforward factual questions, GPT-4.5 (62.5%) leads Gemini 2.5 Pro (52.9%) by almost 10 points. This suggests that for quick, factual consistency, GPT-4.5 is the more reliable of the two.

Conversational Fluency & Creativity: Who’s the Better Chatter?

- MMLU (Massive Multitask Language Understanding — General Knowledge): GPT-4.5 (~90.2%) has a narrow lead over Gemini 2.5 Pro (~85.8%). This implies GPT-4.5 has a broader general knowledge base, which contributes to its incredibly natural and fluent conversational style.

- User Reviews: This is where the benchmarks sometimes diverge from real-world feel. Many users still praise GPT for its creative writing, emotional intelligence, and smooth, human-like dialogue. Gemini, on the other hand, often gets kudos for its deeper critical feedback and more nuanced, analytical responses, sometimes described as being a better “thought partner” for complex ideas.
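
If you’d like to see the scoreboard in one place, here is a small sketch that tallies the head-to-head numbers quoted in this section. It only includes benchmarks where the article gives a score for both Gemini 2.5 Pro and GPT-4.5; the MMLU figures are the approximate ones quoted above, and the function names are just for illustration.

```python
# Head-to-head scores (percent) as quoted in this article:
# benchmark -> (Gemini 2.5 Pro, GPT-4.5)
SCORES = {
    "Humanity's Last Exam": (18.8, 6.4),
    "GPQA Diamond": (84.0, 71.4),
    "SWE-Bench Verified": (63.8, 38.0),
    "Aider Polyglot": (74.0, 44.9),
    "MRCR (128k)": (91.5, 48.8),
    "MMMU": (81.7, 74.4),
    "SimpleQA": (52.9, 62.5),
    "MMLU": (85.8, 90.2),  # approximate figures in the article
}


def gaps(scores):
    """Return {benchmark: Gemini minus GPT-4.5}, in percentage points."""
    return {name: round(g - o, 1) for name, (g, o) in scores.items()}


def tally(scores):
    """Count how many benchmarks each model leads: (Gemini, GPT-4.5)."""
    diffs = gaps(scores)
    gemini_wins = sum(1 for d in diffs.values() if d > 0)
    gpt_wins = sum(1 for d in diffs.values() if d < 0)
    return gemini_wins, gpt_wins


if __name__ == "__main__":
    for name, diff in gaps(SCORES).items():
        leader = "Gemini 2.5 Pro" if diff > 0 else "GPT-4.5"
        print(f"{name:22s} {leader:15s} +{abs(diff):.1f} pts")
    print("Benchmarks led (Gemini, GPT-4.5):", tally(SCORES))
```

A simple win count like this treats every benchmark as equally important, which they aren’t, so read it as a quick summary and not a verdict.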

The “Real World” Battle: Beyond Benchmarks

Benchmarks are great, but how do these AI models fare in the wild? And what about the practicalities like cost?

Pricing: The Wallet Factor

- This is a significant point for many developers and businesses. Gemini 2.5 Pro is currently free to try via the API in its experimental release, subject to rate limits. Yes, you read that right: free!

- On the flip side, GPT-4.5 comes with a price tag. ChatGPT Plus is around $20/month, and API access can be quite costly (think $75 input / $150 output per 1 million tokens for GPT-4.5).

- Verdict on Cost: For now, Google has a huge advantage here, making Gemini significantly more accessible for experimentation and development.
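
To get a feel for what those GPT-4.5 API rates mean in practice, here is a quick back-of-the-envelope calculator. The prices are the ones quoted above; the request sizes in the example are invented for illustration, and actual bills depend on the provider’s current pricing.

```python
# GPT-4.5 API prices as quoted in this article (USD per 1 million tokens).
INPUT_PRICE_PER_M = 75.0
OUTPUT_PRICE_PER_M = 150.0


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of a single API request."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: a 10,000-token prompt with a 1,000-token reply.
    per_request = request_cost(10_000, 1_000)
    print(f"One request:    ${per_request:.2f}")
    print(f"1,000 requests: ${per_request * 1_000:.2f}")
```

At these rates, that hypothetical request costs about 90 cents, so a thousand of them runs to roughly $900. That is the kind of math that makes a free tier attractive for experimentation.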

Real-World Use Cases: Where Do They Shine?

When Gemini is Your Champion:

- Deep Analysis & Research: Crunching massive datasets, summarizing years of research papers, analyzing complex financial reports.

- Advanced Coding & Debugging: When you need an AI to truly understand a codebase, find complex bugs, or generate sophisticated new features.

- Scientific & Medical Breakthroughs: Interpreting medical images, assisting in drug discovery, analyzing scientific data.

- Long-Form Content Generation (with deep context): Generating lengthy reports or articles that require synthesizing tons of information from various sources.

When GPT-4.5 (and the o-series) is Your Go-To:

- Creative Content Creation: Marketing copy, blog posts, scripts, poems, stories — anything that needs a touch of flair and human-like creativity.

- Customer Support & Conversational AI: Building highly natural and empathetic chatbots that can handle complex dialogues.

- Design & Brainstorming: Generating ideas for visual concepts, marketing campaigns, or product features.

- General Chat & Quick Q&A: When you just need a quick, accurate answer or a friendly AI companion for brainstorming.

- Agentic Workflows (with o-series): Tasks where the AI needs to plan steps, use tools, and execute multi-stage commands.

User Sentiments & Evolving Experiences:

- It’s fascinating how user opinions constantly shift as new models drop. Older reviews often credited GPT with better coding and logic, but now, with Gemini’s recent leaps, that narrative is changing rapidly.

- Users sometimes describe GPT’s default writing style as a bit “over-the-top” or overly enthusiastic, while Gemini is praised for its “more nuanced dialogue” and “critical feedback.” Many developers love how Gemini seems to “remember code I’ve given it even later in the conversation,” highlighting its long-context strength.

- However, the recent release of OpenAI’s “o-series” models (o3 and o4-mini), along with the GPT-4.1 variants, has dramatically improved OpenAI’s standing in coding, reasoning, and longer context comprehension. This means user experiences are literally changing by the month, if not week!

The Evolving “Race”: No Finish Line Yet

So, after all this, who’s “winning” the AI race? The honest truth is, it’s not a clear-cut victory for either side. This isn’t a 100-meter dash; it’s an ultra-marathon that’s constantly evolving.

- Specialization vs. General Intelligence: Both Google and OpenAI are pushing towards incredibly powerful, multimodal general intelligences. But their current strengths often lie in slightly different areas. Gemini seems to be pulling ahead in deep analytical thinking, complex problem-solving, and truly understanding massive contexts. OpenAI, with GPT-4.5 and its newer ‘o-series’ and 4.1 models, continues to excel in natural language understanding, creativity, and increasingly, sophisticated reasoning and tool-use.

- The Pace of Innovation: What’s true today might be old news tomorrow. The sheer speed at which these advancements are happening is breathtaking. A new benchmark or a new model release can flip the script overnight.

- It’s About Your Needs: The “best” AI isn’t universal. It’s the one that best fits your specific needs, your project, or your preferred workflow. Do you need an AI that can dissect complex scientific data and write perfect code for it? Gemini might be your guy. Do you need an AI that can brainstorm creative marketing campaigns, write compelling stories, and hold a natural conversation? GPT might be your go-to.

The Future is Bright (and Fast!)

The rivalry between Google and OpenAI is incredibly exciting. It’s pushing the boundaries of what’s possible with artificial intelligence, leading to breakthroughs that will benefit all of us. As users, we get access to increasingly powerful, versatile, and accessible tools that can truly augment our capabilities.

So, while we might not have a definitive “winner” in the AI race today, one thing is for sure: the finish line is nowhere in sight. And honestly, that’s the most thrilling part. Who knows what incredible advancements Google and OpenAI will surprise us with next week? The future of AI is bright, fast, and constantly reshaping our world. Stay tuned!

Sources: dev.to, wikipedia.com, linkedin.com

Authored by: Shorya Bisht
